Multiple linear regression in Mathematica

Continuing my slow journey through The Art of Computer Systems Performance Analysis, I've reached chapter 15, "Other Regression Models". I'll cover the first part of the chapter on multiple linear regression models. The definition of multiple linear regression from the textbook:

A multiple linear regression model allows one to predict a response variable as a function of predictor variables using a linear model of the following form:

Given a sample of observations, the model consists of the following equations:

In vector notation, we have:

or

Box 15.1 contains the formulas relevant to multiple linear regression:

-

Model: or in matrix notation where:

- is a column vector with elements

- is a column vector of observed values of

- is an row by column matrix whose th element if else

-

Parameter estimation:

-

Allocation of variation:

-

Coefficient of determination:

-

Coefficient of multiple correlation

-

Degrees of freedom:

-

Analysis of variance (ANOVA): ;

Regression is significant if is greater than .

-

Standard deviation of errors: .

-

Standard error of regression parameters: where is the diagonal term of

-

Prediction: Mean of future observations:

Or in vector notation: ; here

-

Standard deviation of predictions:

-

All confidence intervals are computed using .

-

Correlation among predictions:

-

Model assumptions

- Errors are independent and identically distributed normal variations with zero mean.

- Errors have the same variance for all values of the predictors.

- Errors are additive.

- 's and are linearly related.

- 's are nonstochastic (i.e not random) and are measured without error.

-

Visual tests:

- Scatter plot of errors versus predicted response should not have any trend.

- The normal quantile-quantile plot of errors should be linear.

Mathematica commands¶

Define our data. Each tuple in the list has form :

data = {

{14, 70, 2},

{16, 75, 5},

{27, 144, 7},

{42, 190, 9},

{39, 210, 10},

{50, 235, 13},

{83, 400, 20}

}

Create a linear model:

lm = LinearModelFit[data, {x1, x2}, {x1, x2}]

-

ANOVA table:

lm[

"ANOVATable" ] -

Mean of response variable, :

N[Mean[lm[

"Response" ]]] == 9.42857 -

SSR, sum of squares due to regression:

lm[

"SequentialSumOfSquares" ] == {199.845, 0.568796} -

Coefficient of determination :

lm[

"RSquard" ] == 0.974236 -

The predicted response variables for each sample, :

lm[

"PredictedResponse" ] == {3.3, 3.7, 6.8, 9.8, 10.0, 11.9, 20.2} -

The residual (error) for each data point in the sample, :

lm[

"FitResiduals" ] == {-1.3, 1.2, 0.15, -0.84, -0.015, 1.02, -0.25} -

Regression parameters :

lm[

"BestFitParameters" ] == {-0.161, 0.118, 0.0265} -

The standard error of the regression parameters:

lm[

"ParameterErrors" ] == {0.91, 0.19, 0.040} -

and , the confidence intervals for regression parameters:

(* Default confidence interval is 0.95. *) lm = LinearModelFit[data, {x1, x2}, {x1, x2}, ConfidenceLevel -> 0.95] lm["ParameterConfidenceIntervals" ](* {{-2.69759, 2.37469}, {-0.416515, 0.652989}, {-0.0858019, 0.138805}} *) -

Prediction for observations at 90% confidence level for both many observations and a single observation.

lm = LinearModelFit[data, {x1, x2}, {x1, x2}, ConfidenceLevel -> 0.9](* for many observations *) lm["MeanPredictionBands" ] /. {x1 -> 100, x2 -> 550}(* {19.5571, 32.919} *) (* for a single observation *) lm["SinglePredictionBands" ] /. {x1 -> 100, x2 -> 550}(* {19.1207, 33.3554} *) -

Mean square values for all regression parameters and the error:

lm[

"ANOVATableMeanSquares" ] == {199.845, 0.568796, 1.325} -

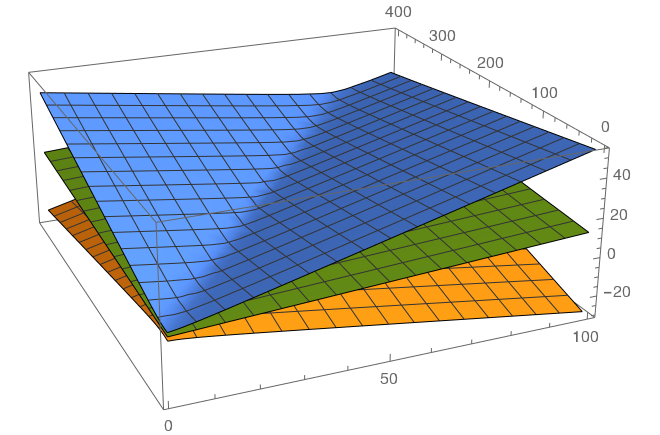

Plot the mean regression response with the confidence intervals:

planes = Append[lm[

"MeanPredictionBands" ], lm["BestFit" ]]; Plot3D[planes, {x1, 1, 20}, {x2, 1, 1000}]

Figure: Regression prediction graph with 95% confidence interval graphs. -

The correlation matrix, showing for every pair of

lm[

"CorrelationMatrix" ] // MatrixForm